Goal

The goal of this lab was to introduce us to the preprocessing skill of geometric correction. The lab was structured to develop our skills in the two major types of geometric correction: image-to-map and image-to-image rectification through polynomial transformation.

Objectives:

1. Use a 7.5 minute digital raster graphic (DRG) image of the Chicago Metropolitan Statistical Area to correct a Landsat TM image of the same area, using ground control points (GCPs) from the DRG to rectify the TM image.

2. Use a corrected Landsat TM image of eastern Sierra Leone to rectify a geometrically distorted image of the same area.

Methods

Image-to-Map Rectification

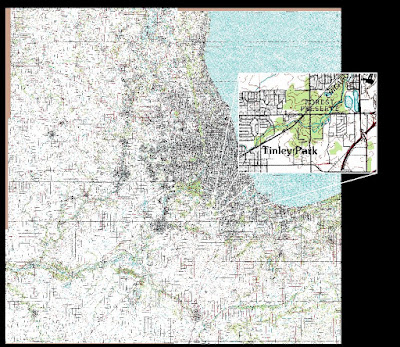

I opened the provided Chicago_drg.img, which is a USGS 7.5 minute digital raster graphic covering part of Chicago (see figure 1).

Figure 1. A USGS 7.5 minute digital raster graphic (DRG) covering part of the Chicago region and adjacent areas. The subset is to show detail.

To rectify the Chicago_2000 image to the Chicago DRG, I used the Multipoint Geometric Correction tool under Multispectral/Ground Control Points in the ERDAS Imagine interface. The Multipoint Geometric Correction window contains two panes: the input image (Chicago_2000.img) on the left and the reference image (Chicago_drg.img) on the right. Each of these panes contains three windows. The windows at the top left and top right show the entire input and reference images, respectively. The two windows at the top center show the zoomed-in area of the input image and the zoomed-in area of the reference image.

See figure 2 for a close-up view.

Figure 2. The Multipoint Geometric Correction window with the input image (Chicago_2000.img) and reference image (Chicago_drg.img).

I chose four pairs of ground control points (GCPs) to align the satellite image (Chicago_2000.img) with the reference image (Chicago_drg.img). Though only three GCPs are necessary for a first order polynomial, it is wise to collect more than the minimum so that the fit does not depend entirely on the exact placement of any single point. Figure 3 displays the Multipoint Geometric Correction window. The table at the bottom lists the RMS (root mean square) error for each individual point and for the image in total. Notice that the total RMS error is below 0.5, which meets the threshold commonly used to accept ground control points as accurate.

Figure 3. The Multipoint Geometric Correction window after placement of the ground control points. The RMS error is less than 0.5.
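To make the RMS error figures more concrete, here is a minimal sketch of how a per-point and a total RMS error can be computed from GCP residuals. The function name `rms_errors` and the sample coordinates are my own illustration, not anything taken from ERDAS Imagine, and I am assuming the residuals are measured in pixels.

```python
import math


def rms_errors(predicted, reference):
    """Return (per-point errors, total RMS error) for matching coordinate lists.

    predicted: where the fitted transformation places each GCP
    reference: where each GCP was actually placed
    Both are lists of (x, y) tuples in the same units (assumed: pixels).
    """
    if len(predicted) != len(reference) or not predicted:
        raise ValueError("need two non-empty lists of equal length")
    # Per-point error is the straight-line distance between the two positions.
    per_point = [
        math.hypot(px - rx, py - ry)
        for (px, py), (rx, ry) in zip(predicted, reference)
    ]
    # Total error is the root of the mean squared per-point error.
    total = math.sqrt(sum(e * e for e in per_point) / len(per_point))
    return per_point, total


# Made-up positions for four GCPs, only to show the call.
predicted = [(10.2, 20.1), (50.0, 19.7), (10.1, 80.3), (49.8, 80.0)]
reference = [(10.0, 20.0), (50.0, 20.0), (10.0, 80.0), (50.0, 80.0)]
per_point, total = rms_errors(predicted, reference)
print(per_point, total)
```

Because the total is built from squared errors, one badly placed GCP raises it noticeably, which is why repositioning the worst point is usually the quickest way to bring the total down.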

To explain the process further: Chicago_drg.img served as the reference map to which we rectified (georeferenced) the Chicago_2000 image. Using points from the reference image, I created a list of GCPs that were used to register the satellite image to the reference image in a first order transformation. This anchors the image to a known location; the reference image already had a known source and geometric model. We then used the computed transformation matrix to resample the unrectified data.

The interpolation method used was nearest neighbor, in which each pixel in the output image is assigned the value of the input pixel nearest to it.
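The nearest neighbor idea can be sketched in a few lines of plain Python. This is only an illustration under my own assumptions: the image is a small list of rows, and `inverse_map` is a hypothetical function that tells us, for each output pixel, which (possibly fractional) input position it came from. It is not how ERDAS Imagine implements resampling internally.

```python
import math


def resample_nearest(image, out_rows, out_cols, inverse_map, fill=0):
    """Resample `image` (a list of rows) onto a new grid by nearest neighbor.

    inverse_map(col, row) returns the fractional (x, y) position in the
    input image that corresponds to the given output pixel.
    Output pixels that fall outside the input image receive `fill`.
    """
    in_rows, in_cols = len(image), len(image[0])
    out = [[fill] * out_cols for _ in range(out_rows)]
    for r in range(out_rows):
        for c in range(out_cols):
            x, y = inverse_map(c, r)
            # Round to the closest input pixel centre.
            ic = int(math.floor(x + 0.5))
            ir = int(math.floor(y + 0.5))
            if 0 <= ir < in_rows and 0 <= ic < in_cols:
                out[r][c] = image[ir][ic]
    return out


# A 2 x 2 image enlarged to 4 x 4 with a made-up mapping.
small = [[1, 2],
         [3, 4]]
big = resample_nearest(small, 4, 4, lambda c, r: (c * 0.5 - 0.25, r * 0.5 - 0.25))
print(big)
```

Since every output value is copied directly from an input pixel, no new brightness values are created, which is the usual reason for choosing this method when the original pixel values need to be preserved.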

The transformation matrix consists of coefficients that are used in polynomial equations to convert the coordinates of the input image. A first order transformation was used because the satellite image was already projected onto a plane but not rectified to the desired map projection.
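As a rough illustration of what those coefficients are, a first order transformation can be written as x' = a0 + a1*x + a2*y and y' = b0 + b1*x + b2*y, with the six coefficients estimated from the GCPs by least squares. The sketch below is my own plain Python version under that assumption. The function names and the sample GCP coordinates are hypothetical, and I am assuming the fit goes from input image coordinates to reference coordinates.

```python
def solve_linear(a, b):
    """Solve a small square system a * x = b by Gauss-Jordan elimination."""
    n = len(b)
    # Work on an augmented copy so the inputs are left untouched.
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("GCPs are degenerate (for example, all on one line)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_first_order(src, dst):
    """Least-squares fit of x' = a0 + a1*x + a2*y and y' = b0 + b1*x + b2*y.

    src: GCP positions in the input image, dst: the same GCPs in the reference.
    Returns [[a0, a1, a2], [b0, b1, b2]].
    """
    if len(src) != len(dst) or len(src) < 3:
        raise ValueError("need at least three matching GCP pairs")
    rows = [(1.0, x, y) for x, y in src]
    # Normal equations (A^T A) c = A^T t, solved once per output coordinate.
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    coeffs = []
    for k in range(2):
        atb = [sum(r[i] * d[k] for r, d in zip(rows, dst)) for i in range(3)]
        coeffs.append(solve_linear(ata, atb))
    return coeffs


def apply_first_order(coeffs, x, y):
    """Convert one input coordinate with the fitted coefficients."""
    (a0, a1, a2), (b0, b1, b2) = coeffs
    return a0 + a1 * x + a2 * y, b0 + b1 * x + b2 * y


# Four made-up GCP pairs, one more than the minimum of three.
src = [(0, 0), (10, 0), (0, 10), (10, 10)]
dst = [(100, 200), (120, 195), (105, 220), (125, 215)]
coeffs = fit_first_order(src, dst)
print(coeffs, apply_first_order(coeffs, 5, 5))
```

With exactly three GCPs the six coefficients are determined exactly and every residual is zero, so the RMS error only becomes informative once extra points are added.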

Image-to-Image Rectification

Part two involved repeating the rectification process, this time with two images instead of an image and a map. A third order polynomial transformation was required because of the extent of the distortion, and third order transformations require at least 10 GCPs.
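The minimum GCP counts quoted in this lab (three for first order, ten for third order) follow from the number of coefficients in a two-variable polynomial of order t, which is (t + 1)(t + 2) / 2. A small sketch, with `min_gcps` being a name I made up for illustration:

```python
def min_gcps(order):
    """Minimum number of GCPs needed for a polynomial transformation of this order."""
    if order < 1:
        raise ValueError("order must be 1 or greater")
    # One GCP is needed per coefficient in each coordinate's polynomial.
    return (order + 1) * (order + 2) // 2


for order in (1, 2, 3):
    print(order, min_gcps(order))
```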


Figure 4. The image-to-image transformation with twelve GCPs. Notice that the total RMS error is below 0.05.

Results

Through this laboratory exercise, I developed skills in the image-to-map and image-to-image rectification methods of geometric correction. This type of preprocessing is commonly performed on satellite images before data or information is extracted from them. The results of the rectification processes can be seen below in figures 5 and 6.

Figure 5. The image-to-map rectified image of Chicago and the surrounding area.

Figure 6. My rectified image compared to the Sierra Leone image that was used as the reference in the transformation process. The color of my image is washed out, but the orientation appears accurate.

Data sources

The data used in this lab were provided by Dr. Cyril Wilson and collected from the following sources:

Satellite images are from the Earth Resources Observation and Science Center, United States Geological Survey.

The digital raster graphic (DRG) is from the Illinois Geospatial Data Clearinghouse.