Goal

The purpose of this lab is to work with measurement and interpretation techniques for spectral reflectance signatures of various materials in satellite images and to perform basic resource monitoring using remote sensing band ratio techniques. During this exercise, I collected spectral signatures from remotely sensed images, graphed them, and analyzed them to test spectral separability, which is an important step in image classification. I also monitored the health of vegetation and soils using basic band ratio techniques.

The main goal of this lab was to equip me with the necessary skills to collect and analyze spectral signature curves, specifically in order to monitor the health of vegetation and soils.

Upon completion of this last introductory remote sensing lab, the idea is that I am now equipped with the necessary skills for an entry-level remote sensing job and am also equipped to work on an independent project to complete this class.

Objectives

1. Spectral signature analysis

2. Resource monitoring

· Vegetation health monitoring

· Soil health monitoring

· Prepare a map to show mineral distribution

Part 1: Spectral signature analysis

In this part of the lab, I used a Landsat ETM+ image (taken in 2000) that covers the Eau Claire area and other regions in WI and MN to collect and analyze spectral signatures of the following earth features:

1. Standing Water

2. Moving water

3. Vegetation

4. Riparian vegetation

5. Crops

6. Urban Grass

7. Dry soil (uncultivated)

8. Moist soil (uncultivated)

9. Rock

10. Asphalt highway

11. Airport runway

12. Concrete surface (Parking lot)

The Field Spectrometer Pro instrument takes reflectance measurements in the visible, near-infrared, and mid-infrared regions of the electromagnetic spectrum (0.4 – 2.5 μm).

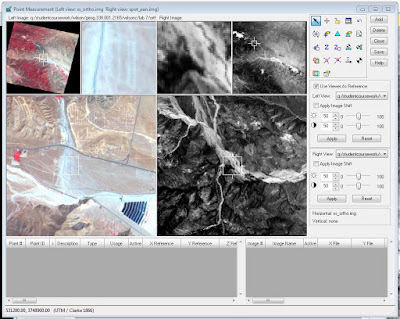

The first spectral signature collected was from Lake Wissota, located in the north-northeast part of Eau Claire. Using the polygon tool (under Home>Drawing), I selected an AOI inside Lake Wissota (Fig. 1). I then opened the Signature Editor (Raster>Supervised>Signature Editor) and graphed the spectral curve of this standing water signature (Fig. 2). The water curve is characterized by high absorption in the near-infrared wavelength range and beyond, which is why the signature curve shows such low reflectance in those bands.

Figure 1. The selected area of Lake Wissota near Eau Claire, Wisconsin, provided the spectral signature for the Standing Water category.

I continued to select areas from the image to represent the other 11 categories, using Google Earth imagery as ancillary data. The Signature Editor window (Fig. 3) displays the other categories and their details. These were compiled into the Spectral Signature graph (Fig. 4).

Figure 3. The Signature Editor window containing the twelve categories.
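The separability test described above can be illustrated outside of ERDAS with a minimal Python sketch. It assumes each signature is the per-band mean of the pixels inside an AOI and uses the Euclidean distance between two mean signatures as a simple separability measure. The function names and pixel values are hypothetical and made up for illustration; this is not the Signature Editor's own method.

```python
import math
from statistics import fmean


def mean_signature(pixels):
    """Return the mean value per band for a list of pixels.

    Each pixel is a sequence of band values, e.g. (band1, band2, band3).
    """
    return [fmean(band) for band in zip(*pixels)]


def euclidean_distance(sig_a, sig_b):
    """Euclidean distance between two mean signatures (larger = more separable)."""
    return math.dist(sig_a, sig_b)


# Made-up pixel values (three bands per pixel), for illustration only.
water_pixels = [(10, 8, 2), (12, 8, 4)]
vegetation_pixels = [(12, 9, 60), (14, 11, 64)]

water_sig = mean_signature(water_pixels)
vegetation_sig = mean_signature(vegetation_pixels)
separation = euclidean_distance(water_sig, vegetation_sig)

print(water_sig, vegetation_sig, round(separation, 2))
```

In this toy case the two classes differ mostly in the third band, so nearly all of the distance comes from that band. Classes whose mean signatures sit close together in every band would be hard to separate in a classification.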

Part 2: Resource monitoring

Section 1: Vegetation health monitoring

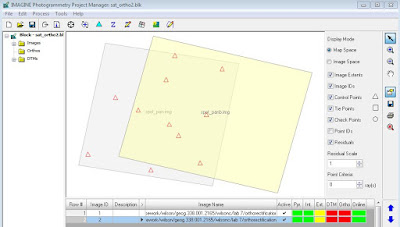

In this section of the lab, I performed a band ratio on the ec_cpw_2000.img image by implementing the normalized difference vegetation index (NDVI). Using the Raster>Unsupervised>NDVI tool to open the Indices interface, I selected the ‘Landsat 7 Multispectral’ sensor to generate an NDVI image. Unfortunately, the ERDAS software malfunctioned before I could take a screenshot of my NDVI image.
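For reference, NDVI is computed per pixel as (NIR - Red) / (NIR + Red), which for Landsat 7 ETM+ corresponds to bands 4 and 3. The following is a minimal Python sketch of that calculation, not the ERDAS implementation. The function names and sample values are hypothetical, and returning 0.0 where both bands are zero is an assumption made to avoid division by zero.

```python
def ndvi(nir, red):
    """NDVI for a single pixel: (NIR - Red) / (NIR + Red).

    Returns 0.0 when both bands are zero (an assumed convention).
    """
    total = nir + red
    if total == 0:
        return 0.0
    return (nir - red) / total


def ndvi_image(nir_band, red_band):
    """Apply NDVI pixel by pixel to two bands stored as lists of rows."""
    return [
        [ndvi(n, r) for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir_band, red_band)
    ]


# Made-up 2x2 band values, for illustration only.
nir_band = [[50, 10], [0, 30]]
red_band = [[10, 30], [0, 30]]

result = ndvi_image(nir_band, red_band)
print(result)
```

Values range from -1 to 1. Healthy vegetation reflects strongly in the near infrared and absorbs red light, so it produces high positive values, while water tends toward negative values.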

Section 2: Soil health monitoring

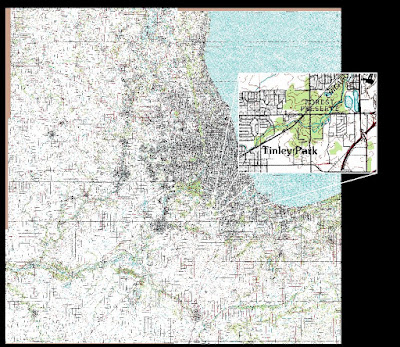

For this final section, I used a simple band ratio, the ferrous mineral ratio, on the ec_cpw_2000.img image (Fig. 5) to monitor the spatial distribution of iron content in soils within Eau Claire and Chippewa counties (Fig. 6).
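A simple band ratio divides one band by another, pixel by pixel. The ferrous mineral ratio is commonly given as the mid-infrared band divided by the near-infrared band (bands 5 and 4 on Landsat ETM+). That band assignment is an assumption here, since the lab write-up does not state it. The sketch below uses hypothetical function names and made-up values, and returning 0.0 for a zero denominator is an assumed convention.

```python
def ferrous_ratio(mir, nir):
    """Ferrous mineral ratio for one pixel: MIR / NIR.

    Assumes MIR = ETM+ band 5 and NIR = ETM+ band 4.
    Returns 0.0 when the NIR value is zero (an assumed convention).
    """
    if nir == 0:
        return 0.0
    return mir / nir


# Made-up (MIR, NIR) pixel pairs, for illustration only.
pixels = [(80, 40), (20, 40), (5, 0)]

ratios = [ferrous_ratio(mir, nir) for mir, nir in pixels]
print(ratios)
```

Higher ratio values would show up as the brighter pixels in a ratio image.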

Figure 5. Eau Claire and Chippewa Counties in false color. This was the image used in both parts of Lab 8.

Figure 6. The final product was this image depicting ferrous mineral deposits in white. Notice that these minerals are distributed on the west side of the Chippewa River.

Sources

Data for this lab exercise were provided to the students of Geography 338: Remote Sensing of the Environment by Dr. Cyril Wilson, Professor of Geography at the University of Wisconsin-Eau Claire.

The satellite image is from the Earth Resources Observation and Science Center, United States Geological Survey.